Other

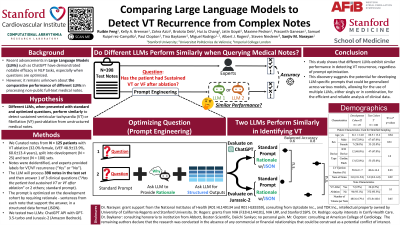

Comparing Large Language Models to Detect VT Recurrence from Complex Notes

Kelly Brennan, None – None, Stanford University; Zahra Azizi, None – None, Stanford University; Brototo Deb, None – None, Stanford University; Hui Ju Chang, None – None, Stanford University; Prasanth Ganesan, None – None, Stanford University; Jatin Goyal, None – None, Stanford University; Maxime Pedron, None – None, Stanford University; Samuel Ruipérez-Campillo, None – None, Stanford University; Paul Clopton, None – None, Stanford University; Tina Baykaner, None – Assistant Professor, Stanford University; Albert Rogers, None – Instructor, Stanford University; Sanjiv Narayan, None – Professor, Stanford University

Purpose: Recent advancements in Large Language Models (LLMs) such as ChatGPT have demonstrated notable efficacy in complex natural language tasks, especially when questions are optimized (prompt engineering). However, it remains known about the comparative performance of different LLMs, both with and without prompt engineering, in processing non-public full-text medical notes. This study aims to test the hypothesis that different LLMs, when presented with standard and optimized prompts, perform similarly to detect sustained ventricular tachycardia (VT) or ventricular fibrillation (VF) post-ablation from unstructured medical notes. If supported, the implication is that LLMs use similar base logic.

Material and Methods: We curated notes from N = 125 patients with VT ablation (32.0% female, LVEF 48.9±13.9%, 60.6±13.4 years), split into development (N = 25) and test (N = 100) sets. Notes were deidentified, and experts provided ground truth labels for VT/VF recurrence (“Yes” or “No”). The LLM will process each note in the test set (N=398 notes) and then answer 1 of 3 clinical questions (“Has the patient had sustained VT or VF after ablation” or 2 others; standard prompt). We also optimized the prompt by requiring rationale - sentences from each note that support the answer, in a structured data format (JSON). Two different LLMs were tested: ChatGPT API with GPT-3.5-turbo and Jurassic-2 (Amazon Bedrock). We chose LLMs that preclude using clinical data for further training, mitigating ethical concerns.

Results: Across 3 clinical questions, ChatGPT and Jurassic-2 with the standard prompt achieved a similar balanced accuracy of 52.7%±2.0% and 50.0% ± 0.0% (Net Reclassification Improvement (NRI)=-0.05, p=0.574). With the optimized prompt, accuracy rose to 84.2% ± 3.5% (NRI=1.06, p< 0.001; vs standard) for ChatGPT and 75.6% ± 3.1% (NRI = 1.12, p< 0.001; vs standard) for Jurassic-2. ChatGPT showed marginally better performance, but this was not statistically significant (NRI = 0.01, p=0.957).

Conclusions: This study shows that different LLMs exhibit similar performance in detecting VT recurrence, regardless of prompt optimization. This discovery suggests the potential for developing LLM-specific prompts that could be generalized across various models, allowing for the use of multiple LLMs, either singly or in combination, for the efficient and reliable analysis of clinical data.

Material and Methods: We curated notes from N = 125 patients with VT ablation (32.0% female, LVEF 48.9±13.9%, 60.6±13.4 years), split into development (N = 25) and test (N = 100) sets. Notes were deidentified, and experts provided ground truth labels for VT/VF recurrence (“Yes” or “No”). The LLM will process each note in the test set (N=398 notes) and then answer 1 of 3 clinical questions (“Has the patient had sustained VT or VF after ablation” or 2 others; standard prompt). We also optimized the prompt by requiring rationale - sentences from each note that support the answer, in a structured data format (JSON). Two different LLMs were tested: ChatGPT API with GPT-3.5-turbo and Jurassic-2 (Amazon Bedrock). We chose LLMs that preclude using clinical data for further training, mitigating ethical concerns.

Results: Across 3 clinical questions, ChatGPT and Jurassic-2 with the standard prompt achieved a similar balanced accuracy of 52.7%±2.0% and 50.0% ± 0.0% (Net Reclassification Improvement (NRI)=-0.05, p=0.574). With the optimized prompt, accuracy rose to 84.2% ± 3.5% (NRI=1.06, p< 0.001; vs standard) for ChatGPT and 75.6% ± 3.1% (NRI = 1.12, p< 0.001; vs standard) for Jurassic-2. ChatGPT showed marginally better performance, but this was not statistically significant (NRI = 0.01, p=0.957).

Conclusions: This study shows that different LLMs exhibit similar performance in detecting VT recurrence, regardless of prompt optimization. This discovery suggests the potential for developing LLM-specific prompts that could be generalized across various models, allowing for the use of multiple LLMs, either singly or in combination, for the efficient and reliable analysis of clinical data.